AR-Sandbox is a visually augmented sandbox. It is inspired by SARndbox and was developed by a team of three students for our augmented reality lecture. The project is available on GitHub.

Project in Action

Project Setup

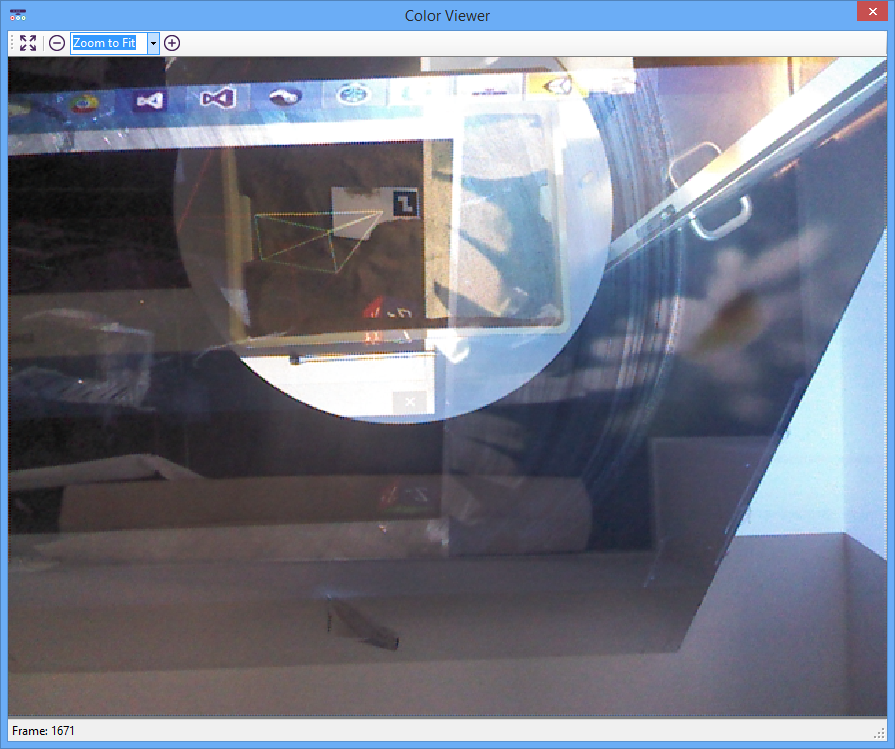

We used a Microsoft Kinect to track the sandbox and a regular projector to project the color image back onto the sandbox. Additionally, we used a big mirror on the wall for our setup.

Our goal was to implement an augmented sandbox application for Windows. We decided to use the Unity3D engine as a base, so we could use its physics and animation capabilities for additional simulations.

To get the Kinect working in Unity3D, we used the Unity3D Kinect Plugin by Carnegie Mellon University. For image processing we used the AForge.NET library.

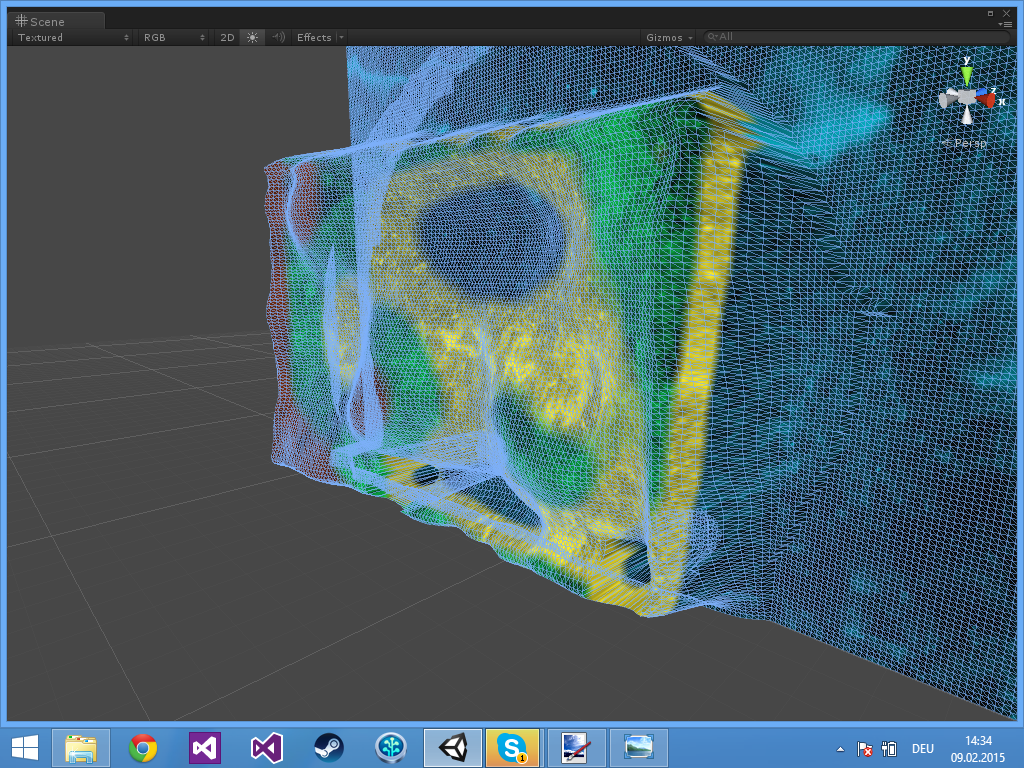

The Kinect depth image is cropped and smoothed with a Gaussian filter. Afterwards a mesh is created and updated in Unity3D to represent the sandbox surface. This mesh was intended to be used for physics simulation, but no physics made it into the project. For the coloring we use a custom shader that blends different layer textures.
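
The crop-and-smooth step can be illustrated with a small, dependency-free Python sketch. This is not the project's code (which is C# and relies on AForge.NET); the function names, the kernel radius and the sigma value are assumptions chosen for illustration only.

```python
import math

def crop(depth, left, top, width, height):
    """Return a sub-rectangle of a row-major 2D depth image."""
    return [row[left:left + width] for row in depth[top:top + height]]

def gaussian_kernel(radius, sigma):
    """Build a normalized 1D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(image, kernel):
    """Convolve every row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    out = []
    for row in image:
        n = len(row)
        out.append([
            sum(k * row[min(max(x + i - radius, 0), n - 1)]
                for i, k in enumerate(kernel))
            for x in range(n)
        ])
    return out

def transpose(image):
    """Swap rows and columns of a 2D list."""
    return [list(col) for col in zip(*image)]

def gaussian_blur(image, radius=2, sigma=1.0):
    """Separable Gaussian blur: filter the rows, then the columns."""
    kernel = gaussian_kernel(radius, sigma)
    horizontally_blurred = blur_rows(image, kernel)
    return transpose(blur_rows(transpose(horizontally_blurred), kernel))
```

A single outlier pixel is spread over its neighbours rather than removed, which is consistent with the spike problem described under "Edges of the Box".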

Problems

During development we encountered some smaller problems.

Edges of the Box

The semitransparent edges of our sandbox weren’t captured correctly by the Kinect and caused some value spikes. On top of that, the Gaussian filter wasn’t able to handle those spikes and produced big squares in the filtered image. As a solution we simply taped the edges of the box so that they were captured correctly.

Calibration

For calibration we planned an automatic calibration via QR markers. Unfortunately the RGB camera of the Kinect had problems with the mirror: the RGB image was far too distorted, so our QR tracking wasn’t able to detect any markers, and we skipped that. The source code for QR detection is in the project, but the calibration is not implemented. Instead we used a simple calibration through mouse clicks and by moving the camera around in the Unity3D scene.

Your application is very nice. It works inside Unity, but it doesn’t work when I build it: the Kinect is running but nothing is detected.

Hi Julian,

The website for downloading the Unity Kinect Wrapper Plugin (CMU) is no longer available (Error 404). Is there any other source for downloading the plugin?

Kai Chun Hui, I do not know anything about the “Unity Kinect Wrapper Plugin” but there is an excellent article about the Kinect and Unity:

https://medium.com/@anran1015/unity-kinect-documentation-cf61a25f732

The article says you must get:

– Kinect Windows SDK v1.7, https://www.microsoft.com/en-us/download/details.aspx?id=36996

– runtime SDK v1.7, https://www.microsoft.com/en-us/download/confirmation.aspx?id=36997

– developer toolkit v1.7, https://www.microsoft.com/en-us/download/details.aspx?id=36998

And you must get the Unity asset:

– Download Kinect with MS-SDK from the Unity Asset Store and import everything into the project.

https://assetstore.unity.com/packages/tools/kinect-with-ms-sdk-7747

Also, we made an AR Sandbox for children’s games using Thom Wolfe’s instructions. Our blog is here:

https://catbirdar.wordpress.com/

Hi Julian,

this is a cool project!

I could think of a few cool applications of this in the area of landscape planning etc.

However, I am quite concerned by the announcement that Microsoft has discontinued the Kinect. Looks like any project using this could soon be obsolete once the last second-hand Kinects have been sold…

Are you aware of any hardware alternatives that are equally affordable and could replace the Kinect?

Thanks,

Tobi

Hi Tobi,

Thanks! I strongly dislike the discontinuation of the Kinect as well. In my opinion it is quite a powerful device, especially in conjunction with its official SDK.

Anyway, there are some hardware alternatives. On its Kinect developer site, Microsoft recommends the Intel RealSense cameras. In addition, there is also the Xtion (Pro) by Asus.

I did some brief testing with the Xtion myself. It is much smaller, lighter and only requires USB 2.0, though it also has a lower camera resolution.

Hi Julian Löhr,

Thank you so much for the GitHub project. I am a university student and I want to reproduce the project with Kinect V2, but failed. I read your comment on GitHub: Replace the Plugin with V2 one and change the DepthMesh.cs to retrieve the Depth Image from the new plugin. I searched for the Unity3D Kinect V2 plugin by Carnegie Mellon University but didn’t find any useful results, so there are many compiler errors now. I’d be so grateful if you could give me a hand with the project.

AFAIK there is no V2 plugin by Carnegie Mellon University. I was referring to the official Microsoft V2 Unity Plugin that can be found here: https://developer.microsoft.com/en-us/windows/kinect under “NuGet and Unity Pro add-ons”

I’d just like to add that I have never used the V2 plugin myself.

Hi Julian Löhr,

Thank you so much for the GitHub project. I am trying to use your project for school purposes and I’d be so thankful if you could give a brief explanation of how you build the DepthMesh (DepthMesh.cs).

Also, it seems that you only detect mountains and not volcanoes. Do you have any idea how to detect a volcano in a depth image (heightmap)?

Thanks in advance, Sir.

Hi,

nice to hear that the project is used somewhere else.

The Depth Mesh is a simple surface reconstruction of the Kinect depth image. Each pixel of the depth image is used as a vertex: its color value becomes the Z position, and its X,Y pixel coordinates become the X,Y position. In addition, each square of four adjacent vertices is combined into two faces (triangles). So for a single-color depth image, the result is a flat grid-like mesh. At runtime only the Z values of the vertices are changed accordingly.
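
The grid construction described above can be sketched in a few lines of Python. This is an illustration only, not the contents of DepthMesh.cs; the function names, the `z_scale` parameter and the triangle winding order are assumptions.

```python
def build_depth_mesh(depth, z_scale=1.0):
    """Turn a row-major 2D depth image into (vertices, triangles).

    Every pixel becomes one vertex (x, y, z); every square of four
    adjacent vertices is split into two triangles of vertex indices.
    """
    rows, cols = len(depth), len(depth[0])
    vertices = [(float(x), float(y), depth[y][x] * z_scale)
                for y in range(rows) for x in range(cols)]
    triangles = []
    for y in range(rows - 1):
        for x in range(cols - 1):
            i = y * cols + x                  # top-left corner of the square
            triangles.append((i, i + cols, i + 1))
            triangles.append((i + 1, i + cols, i + cols + 1))
    return vertices, triangles

def update_heights(vertices, depth, z_scale=1.0):
    """Keep X and Y of each vertex and replace only Z from a new depth image."""
    cols = len(depth[0])
    return [(vx, vy, depth[i // cols][i % cols] * z_scale)
            for i, (vx, vy, _) in enumerate(vertices)]
```

The triangle list is built once, since the topology never changes; only `update_heights` would need to run for each new depth frame.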

The script handles the setup and buffer allocation at the beginning. Then, while running, the Kinect depth image is acquired as a byte array and the vertices of the mesh are modified. Along the way we also clamp the color value, crop the image and apply a Gaussian filter.

We do not detect any mountains. The shader simply blends textures according to the Z value of each vertex/pixel, which is essentially the same as coloring and displaying the height map or depth image.
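
To illustrate height-based blending outside of a shader, here is a small Python sketch that interpolates between layer colors by Z value. The real project blends textures in a Unity shader; the layer heights and the RGB colors below are made up for the example.

```python
# Hypothetical layers: (height threshold, RGB color), sorted by ascending height.
EXAMPLE_LAYERS = [
    (0.0, (0, 0, 255)),      # lowest points: water
    (0.5, (0, 255, 0)),      # middle: grass
    (1.0, (255, 255, 255)),  # highest points: snow
]

def blend_layers(height, layers):
    """Linearly blend the two layer colors that bracket the given height."""
    if height <= layers[0][0]:
        return layers[0][1]
    for (h0, c0), (h1, c1) in zip(layers, layers[1:]):
        if height <= h1:
            t = (height - h0) / (h1 - h0)   # 0 at lower layer, 1 at upper layer
            return tuple(a + (b - a) * t for a, b in zip(c0, c1))
    return layers[-1][1]                    # above the top layer
```

For example, a height of 0.25 lies halfway between the first two layers and yields an even mix of their colors.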

My first guess to detect volcanoes would be to try to detect rings or small spots in the height map.
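
One possible way to read the "detect rings" idea is to look for a low center pixel surrounded by a higher rim that in turn stands above the terrain further out. The Python sketch below is only an untested heuristic along those lines, not part of the project; the function names, the square (Chebyshev) rings and the thresholds are all assumptions.

```python
def ring(height, cx, cy, r):
    """Values on the square ring at Chebyshev distance r around (cx, cy)."""
    return [height[cy + dy][cx + dx]
            for dy in range(-r, r + 1)
            for dx in range(-r, r + 1)
            if max(abs(dx), abs(dy)) == r]

def find_craters(height, radius=2, min_rim=0.1):
    """Return (x, y) of pixels that look like a crater center.

    A pixel qualifies if every rim pixel at distance `radius` is at least
    `min_rim` higher than it, and the rim is on average higher than the
    ring at distance 2 * radius (i.e. the rim is a raised ring).
    """
    rows, cols = len(height), len(height[0])
    margin = 2 * radius
    hits = []
    for y in range(margin, rows - margin):
        for x in range(margin, cols - margin):
            rim = ring(height, x, y, radius)
            outer = ring(height, x, y, 2 * radius)
            rim_is_higher = min(rim) - height[y][x] >= min_rim
            rim_is_raised = sum(rim) / len(rim) > sum(outer) / len(outer)
            if rim_is_higher and rim_is_raised:
                hits.append((x, y))
    return hits
```

On real, noisy depth data the heightmap would likely need smoothing first, and `radius` would have to roughly match the size of the craters people build.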

Julian! Thank you so much for the Github page and videos and clear explanation. At Nova Labs, a maker space in Reston, Virginia, (nova-labs.org) we are starting to build an AR Sandbox like the UC Davis one. However, they use Linux and I was hoping we could find Windows software to do the same thing. But YOU are the only person I found who created AR Sandbox with Windows Unity. Is that true?

At the time of the project we did some research and indeed didn’t come up with any other Windows sandbox. As the project is now almost 2.5 to 3 years old, I don’t know if that still holds true.

Anyway, feel free to use the AR-Sandbox as it is or as a base for your own project. If you have any further questions, I’d be glad to help out.

Julian! We found another person who did AR Sandbox on Windows. It’s Magic Sand. https://github.com/thomwolf/Magic-Sand and they are using OpenFrameworks.

It’s great work.

kinetic and how much more appropriate from the sandbox,Sometimes does not show blue。mindepth and maxdepth

Improper。

Hi, I am sorry, but I am unable to understand your comment 🙁

Oh, it’s great work you have done. I have some questions here. Is it possible to add rain, water flow and volcano eruptions to this? As far as I have seen, SARndbox can do some of these and not others. I read several articles on implementing water flow simulation, but I still don’t know how to implement it with this project. Can you throw some light on this?

Hi, thanks for your comment 😀

The project uses Unity3D, which is a full-fledged 3D game engine. It does have a physics system, although it doesn’t have native flow simulation, AFAIK.

So you would need to implement such things yourself or look for other projects that already implement them for Unity3D. Unity3D has a huge and very active community, so you’ll likely find something.

Thanks for your instant reply. If I mount the projector and Kinect upside down and remove the mirror, what kind of changes do I have to make in the code, or will it work fine without changes?

It may somehow “work”. But when you remove the mirror, my guess is that the projector output and/or Kinect input will be flipped. I can’t remember whether we had a boolean switch for that, but I don’t think so.

If you run into mirrored/flipped issues, see DepthMesh.cs; that’s the script that fetches the depth image from the Kinect and generates the vertices for the mesh. Otherwise check the “Scaling” properties of the mesh GameObject in the scene. Maybe we had a negative value set up to flip it.
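
If the image does turn out mirrored, one low-effort fix is to flip the depth rows or columns before they are turned into vertices. A minimal Python sketch of that idea (the project itself is C#; these function names are hypothetical and not taken from DepthMesh.cs):

```python
def flip_horizontal(image):
    """Mirror a row-major 2D image left to right."""
    return [list(reversed(row)) for row in image]

def flip_vertical(image):
    """Mirror a row-major 2D image top to bottom."""
    return [list(row) for row in reversed(image)]
```

Applying both flips corresponds to a 180 degree rotation, which is what an upside-down sensor would need.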

Another issue is going to be fine-tuning the values for the depth mesh. We cropped the value range and set it according to our lowest and highest sand points. So you need to adjust those values depending on how far away the Kinect is from the sandbox and how much sand you have (how deep you can dig or how high you can pile the sand).
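
The value-range tuning can be pictured as clamping each raw depth reading to a window and mapping it to a height between 0 and 1. The Python sketch below assumes the sensor looks down at the sand, so smaller distances mean higher sand; the names `min_depth` and `max_depth` and the example numbers are hypothetical, not the project's actual settings.

```python
def normalize_depth(raw, min_depth, max_depth):
    """Map a raw depth reading to a height in [0, 1].

    min_depth: distance to the highest sand point (closest to the sensor).
    max_depth: distance to the lowest sand point (furthest from the sensor).
    Readings outside the window are clamped to its borders.
    """
    clamped = min(max(raw, min_depth), max_depth)
    return (max_depth - clamped) / (max_depth - min_depth)
```

With a window of 900 to 1100, a reading of 1000 maps to 0.5, anything closer than 900 maps to 1.0, and anything further than 1100 maps to 0.0.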